
This blog post represents the Igalia compilers team;

all opinions expressed here are our own and may not reflect the opinions

of other members of the MessageFormat Working Group or any other group.

MessageFormat 2.0 is a new standard for expressing translatable messages in user interfaces, both in Web applications and other software.

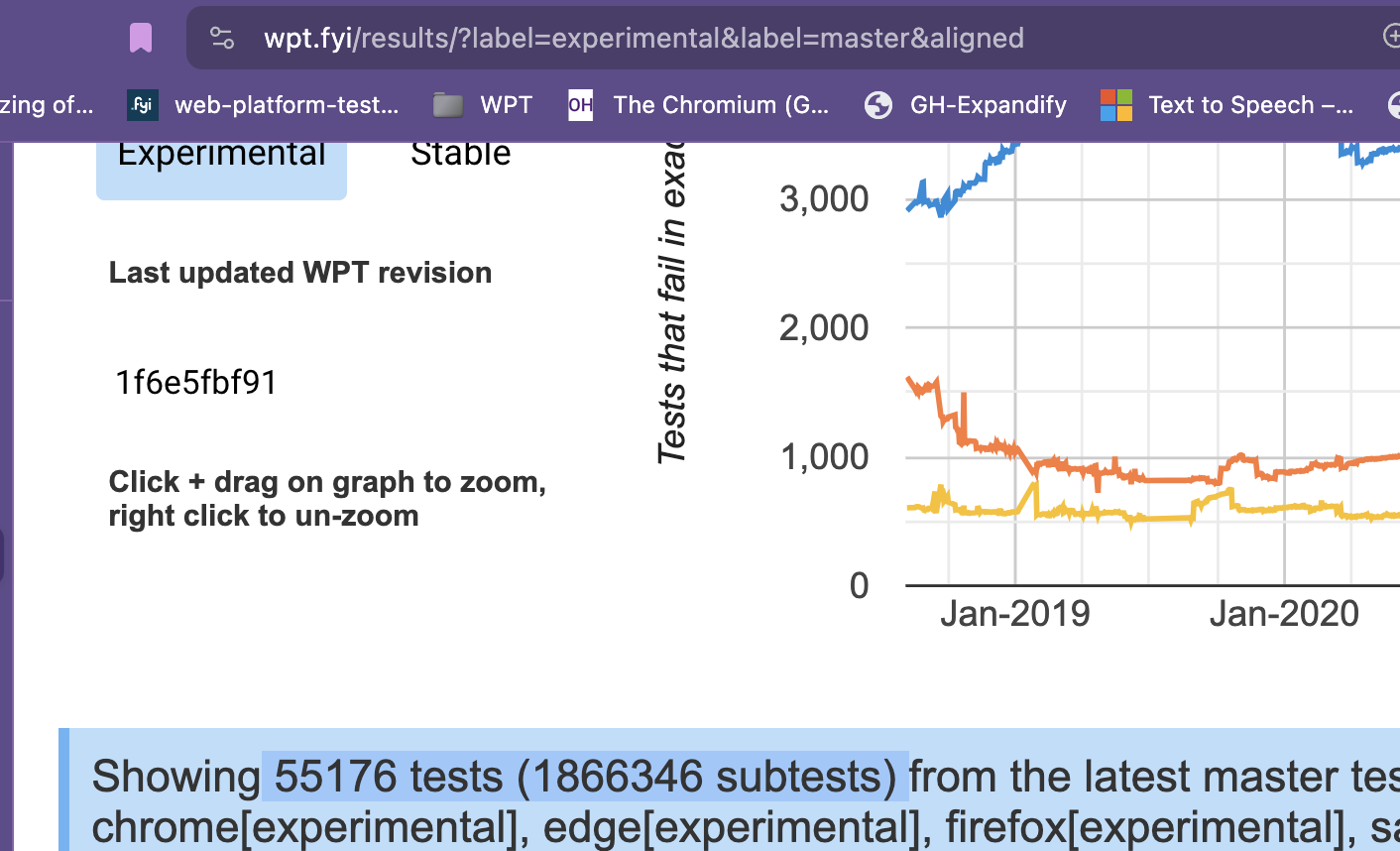

Last week, my work on implementing MessageFormat 2.0 in C++ was released in ICU 75, the latest release of the International Components for Unicode library.

As a compiler engineer, when I learned about MessageFormat 2.0,

I began to see it as a programming language, albeit an unconventional one.

The message formatter is analogous to an interpreter for a programming language.

I was surprised to discover a programming language hiding in

this unexpected place.

Understanding my surprise requires some context:

first of all, what "messages" are.

A story about message formatting

Over the past 40 to 50 years, user interfaces (UIs) have grown increasingly complex.

As interfaces have become more interactive and dynamic,

the process of making them accessible in a multitude of natural languages

has increased in complexity as well.

Internationalization (i18n) refers to this general practice,

while the process of localization (l10n) refers to the act

of modifying a specific system for a specific natural language.

i18n history

Localization of user interfaces (both command-line and graphical)

began by translating strings embedded in code that implements UIs.

Those strings are called "messages".

For example, consider a text-based adventure game:

messages like "It is pitch black. You are likely to be eaten by a grue."

might appear anywhere in the code. As a slight improvement, the messages could all be stored

in a separate "resource file" that is selected based on the user's locale.

The ad hoc approaches to translating these messages and integrating them into code

didn't scale well. In the late 1980s, a gettext() function was proposed for C programs;

it was never standardized but was widely adopted, including in glibc.

This function primarily provided string replacement functionality.

While it was limited, it inspired the work that followed.

Microsoft and Apple operating systems had more powerful i18n support

during this time, but that's beyond the scope of this post.

The rise of the Web required increased flexibility. Initially, static documents and apps

with mostly static content dominated. Java, PHP, and Ruby on Rails all brought

more dynamic content to the web, and developers using these languages

needed to tinker more with message formatting.

Their applications needed to handle not just static strings, but messages

that could be customized to dynamic content in a way that produced

understandable and grammatically correct messages for the target audience.

MessageFormat arose as one solution to this problem, implemented by Taligent (along with other i18n functionality).

The creators of Java had an interest in making Java the preferred language

for implementing Web applications, so

Sun Microsystems incorporated Taligent's work into Java in JDK 1.1 (1997).

This version of MessageFormat

supported pattern strings, formatting basic types, and choice format.

The MessageFormat implementation was then incorporated into ICU, the widely used library that implements internationalization functions.

Initially, Taligent and IBM (which was one of Taligent's parent companies)

worked with Sun to maintain parallel i18n implementations in ICU and

in the JDK, but eventually the two implementations diverged.

Moving at a faster pace, ICU added plural selection to MessageFormat

and expanded other capabilities,

based on feedback from developers and translators

who had worked with the previous generations of localization tools.

ICU also added a C++ port of this Java-based implementation;

hence, the main ICU project includes ICU4J (implemented in Java)

and ICU4C (implemented in C++ with some C APIs).

Since ICU MessageFormat was introduced, far more complex Web UIs,

largely based on JavaScript, have appeared. Complex programs

can generate much more interesting content, which implies

more complex localization. This necessitates a different model

of message formatting. An update to MessageFormat has been

in the works at least since 2019, and that work culminates with the

recent release of the MessageFormat 2.0 specification.

The goals are to provide more modularity and extensibility, and easier adaptation for different locales.

For brevity, we will refer to ICU MessageFormat, otherwise known

simply as "MessageFormat", as "MF1" throughout.

Likewise, "MF2" refers to MessageFormat 2.0.

The work described in this post is motivated

by the desire to make it as easy as possible

to write software that is accessible to people

regardless of the language(s) they use to communicate.

But message formatting is needed even in user interfaces

that only support a single natural language.

Consider this C code, where number is presumed

to be an unsigned integer variable that's

already been defined:

printf("You have %u files", number);

We've probably all used applications that informed us

that we "have 1 files". While it's probably clear

what that means, why should human readers have to mentally

correct the grammatical errors of a computer?

A programmer trying to improve things might write:

printf("You have %u file%s", number, number == 1 ? "" : "s");

which handles the case where number is 1. However, English

is full of irregular plurals: consider "bunny" and "bunnies",

"life" and "lives", or "mouse" and "mice". The code

would be easier to read and maintain if it

were easier to express "print the plural of 'file'",

instead of a conditional expression that must

be scrutinized for its meaning.

While "printf" is short for "print formatted", its

formatting is limited to simpler tasks like

printing numbers as strings, not complex tasks like

pluralizing "mouse".

This is just one example; another example is messages

including ordinals, like "1st", "2nd", or "3rd",

where the suffix (like "st") is determined in a

complicated way from the number (consider "11th"

versus "21st").
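To make the ordinal rule concrete, here is a minimal C sketch that covers English only. The names ordinal_suffix and format_ordinal are hypothetical helpers made up for this illustration, not part of any library; a real application would rely on locale data rather than hard-coding one language's rules.

```c
#include <stdio.h>

/* Hypothetical helper: returns the English ordinal suffix for n.
   English-only; other languages need entirely different rules. */
const char *ordinal_suffix(unsigned n) {
    unsigned last_two = n % 100;
    /* 11, 12, and 13 are irregular: "11th", not "11st". */
    if (last_two >= 11 && last_two <= 13) {
        return "th";
    }
    switch (n % 10) {
        case 1:  return "st";
        case 2:  return "nd";
        case 3:  return "rd";
        default: return "th";
    }
}

/* Hypothetical helper: writes n followed by its suffix into buf. */
int format_ordinal(char *buf, size_t size, unsigned n) {
    return snprintf(buf, size, "%u%s", n, ordinal_suffix(n));
}
```

Even this small rule needs a special case, and it says nothing about any language other than English, which is part of why pushing such logic into application code scales poorly.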

We've seen how messages can vary based on user input

in non-local ways: the bug in "You have %u files"

is that not only does the number of files vary,

so does the word "files".

So you might begin to see the value of

a specialized notation for expressing such messages,

which would make it easier to notice bugs like

the "1 files" bug and even prevent them in the first place.

That notation might even resemble

a domain-specific language.

Turning now to translation,

you might be wondering why you would need a separate message formatter,

instead of writing code in your programming language of choice that

would look something like this (if your language of choice is C/C++):

printf("There was %s on planet %d", what, planet);

Here, the message is the first argument to printf and the other

arguments are substituted into the message at runtime; no special

message formatting functionality is used, beyond what the C standard

library offers.

The problem is that if you want to translate the words in the message,

translation may require changing the code,

not just the text of the message.

Suppose that in the target language, we needed to write the equivalent of:

printf("On planet %d, there was a %s", what, planet);

Oops! We've reordered the text, but not the other arguments to printf.

A modern C/C++ compiler with format-string warnings enabled would typically flag this at compile time (since what is a string and not an integer).

But it's easy to imagine similar cases where both arguments are strings, and

a nonsense message would result. Message formatters are necessary not only

for resilience to errors, but also for division of labor:

people building systems want to decouple the tasks of programming and translation.

In search of a better tool for the job, we turn to

one of many tools for formatting messages: ICU MessageFormat (MF1).

According to the ICU4C API documentation,

"MessageFormat prepares strings for display to users,

with optional arguments (variables/placeholders)".

The MF2 working group describes MF1 more succinctly as "An i18n-aware printf, basically."

Here's an expanded version of the previous printf example,

expressed in MF1 (and taken from the "Usage Information" of the API docs):

"At {1,time,::jmm} on {1,date,::dMMMM}, there was {2} on planet {0,number}."

Typically, the task of creating a message string like this is done by a software engineer,

perhaps one who specializes in localization. The work of translating the words in the message

from one natural language to another is done by a translator,

who is not assumed to also be a software developer.

The designers of MF1 made it clear in the syntax what content needs to be translated,

and what doesn't. Any text occurring inside curly braces is non-translatable:

for example, the string "number" in the last set of curly braces

doesn't mean the word "number", but rather,

is a directive that the value of argument 0 should be formatted as a number.

This makes it easy for both developers and translators to work with a message.

In MF1, a piece of text delimited by curly braces is called a "placeholder".

The numbers inside placeholders represent arguments provided at runtime.

(Arguments can be specified either by number or name.)

Conceptually, time, date, and number are formatting functions,

which take an argument and an optional "style" (like ::jmm and ::dMMMM

in this example). MF1 has a fixed set of these functions.

So {1,time,::jmm} should be read as "Format argument 1 as a time value,

using the format specified by the string ::jmm". (The details of

how the format strings work aren't important for this explanation.)

Since {2} has no formatting function specified,

the value of argument 2 is formatted based on its type.

(From context, we can suppose it has a string type,

but we would need to look at the arguments to the message to know for sure.)

The set of types that can be formatted

in this way is fixed (users can't add new types of their own).

For brevity, I won't show how to provide the arguments that are substituted

for the numbers 0, 1 and 2 in the message; the API documentation shows

the C++ code to create those arguments.

MF1 addresses the reordering issue we noticed in the printf example.

As long as they don't change what's inside the placeholders,

a translator doesn't have to worry that their translation will

disturb the relationship between placeholders and arguments.

Likewise, a developer doesn't have to worry about manually

changing the order of arguments to reflect a translation of

the message. The placeholder {2} means the same thing

regardless of where it appears in the message.

(Using named rather than positional arguments would

make this point even clearer.)
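To illustrate why index-based placeholders survive reordering, here is a toy substitution routine in C. It is not the ICU API: the function name substitute is hypothetical, it handles only single-digit placeholders of the form {N}, and it ignores formatting functions, styles, and quoting entirely.

```c
#include <stdio.h>
#include <string.h>

/* Toy substitution, NOT the ICU API: replaces each "{N}" (N a single
   digit) in pattern with args[N]. Returns 0 on success, -1 if the
   output buffer is too small or an argument index is out of range. */
int substitute(char *out, size_t size, const char *pattern,
               const char *const args[], size_t nargs) {
    size_t used = 0;
    if (size == 0) return -1;
    for (const char *p = pattern; *p != '\0'; ) {
        if (p[0] == '{' && p[1] >= '0' && p[1] <= '9' && p[2] == '}') {
            size_t index = (size_t)(p[1] - '0');
            if (index >= nargs) return -1;
            size_t len = strlen(args[index]);
            if (used + len >= size) return -1;  /* keep room for NUL */
            memcpy(out + used, args[index], len);
            used += len;
            p += 3;  /* skip past "{N}" */
        } else {
            if (used + 1 >= size) return -1;
            out[used++] = *p++;  /* copy literal text unchanged */
        }
    }
    out[used] = '\0';
    return 0;
}
```

With an argument array such as {"7", "a disturbance"}, the patterns "There was {1} on planet {0}" and "On planet {0}, there was {1}" both produce sensible output from the same calling code; only the pattern text changes.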

(The ICU User Guide contains more documentation on MF1. A tutorial by Mohammad Ashour also contains some useful information on MF1.)

To summarize a few of the shortcomings of MF1:

- Lack of modularity: the set of formatters (mostly for numbers, dates, and times)

is fixed. There's no way to add your own.

- Like formatting, selection (choosing a different pattern

based on the runtime contents of one or more arguments) is not customizable.

It can only be done either based on plural form, or literal string matching.

This makes it hard to express

the grammatical structures of various human languages.

- No separate data model: the abstract syntax is concrete syntax.

- There is no way to declare a local variable so that the same piece

of a message can be re-used without repeating it;

all variables are external arguments.

- Baggage: extending the existing spec with different syntax could

change the meaning of existing messages, so a new spec is needed.

(MF1 was not designed for forward-compatibility, and the new spec

is backward-incompatible.)

MF2 represents the best efforts of a number of experts in the field

to address these shortcomings and others.

I hope the brief history I gave shows

how much work, by many tech workers, has gone into the problem

of message formatting.

Synthesizing the lessons of the past few decades into one standard

has taken years, with seemingly small details provoking

nuanced discussions.

What follows may seem complex, but

the complexity is inherent in the problem space that it addresses. The plethora

of different competing tools to address the same set of problems is evidence for that.

"Inherent is Latin for not your fault." -- Rich Hickey, "Simple Made Easy"

A simple example

Here's the same example in MF2 syntax:

At time {$time :datetime hour=numeric minute=|2-digit|}, on {$time :datetime day=numeric month=long},

there was {$what} on planet {$planet :number}

As in MF1, placeholders are enclosed in curly braces. Variables are prefixed with a $;

since there are no local bindings for time, what, or planet, they

are treated as external arguments. Although variable names are already

delimited by curly braces, the $ prefix helps make it even clearer

that variable names should not be translated.

Function names are now prefixed by a :, and options (like hour) are named.

There can be multiple options, not just one as in MF1.

This is a loose translation of the MF1 version, since in MF2,

the skeleton option is not part

of the alpha version of the spec.

(It is possible to write a custom function that takes this option,

and it may be added in the future as part of the built-in datetime function.)

Instead, the :datetime formatter can take a variety of

field options (shown in the example) or style options (not shown).

The full options are specified in the Default Registry portion of the spec.

Literal strings that occur within placeholders, like 2-digit, are quoted.

The MF2 syntax for quoting a literal is to enclose it

in vertical bars (|).

The vertical bars are optional in most cases,

but are necessary for the literal 2-digit because it includes a hyphen.

This syntax was chosen instead of literal quotation marks (") because

some use cases for MF2 messages require embedding them in a file format

that assigns meaning to quotation marks.

Vertical bars are less commonly used in this way.

A shorter version of this example is:

At time {$time :time}, on {$time :date}, there was {$what} on planet {$planet :number}

:time formats the time portion of its operand with default options,

and likewise for :date.

Implementations may differ on how options are interpreted

and their default values.

Thus, the formatted output of this version may be slightly

different from that of the first version.

A more complex example: selection and custom functions

So far, the examples are single messages that contain

placeholders that vary based on runtime input.

But sometimes, the translatable text in a message

also depends on runtime input.

A common example is pluralization: in English, consider

"You have one item" versus "You have 5 items."

We can call these different strings "variants"

of the same message.

While you can imagine code that selects the right variant

with a conditional, that violates the separation

between code and data that we previously discussed.

Another motivator for variants is grammatical case.

English has a fairly simple case system (consider

"She told me" versus "I told her";

the "she" of the first sentence changes to "her"

when that pronoun is the object of the transitive verb

"tell").

Some languages have much more complex case systems.

For example, Russian

has six grammatical cases; Basque, Estonian, and Finnish

all have more than ten.

The complexity of translating messages into and between

languages like these is further motivation for organizing

all the variants of a message together.

The overall goal is to make messages as self-contained as possible,

so that changing them doesn't require changing the code

that manipulates them. MF2 makes that possible in more situations.

While MF1 supports selection based on plural categories,

MF2 also supports a general, customizable form of selection.

Here's a very simple example

(necessarily simple since it's in English) of using custom selectors

in MF2 to express grammatical case:

.match {$userName :hasCase}

vocative {{Hello, {$userName :person case=vocative}!}}

accusative {{Please welcome {$userName :person case=accusative}!}}

* {{Hello!}}

The keyword .match designates the beginning of a matcher.

How to read a matcher

Although MF2 is declarative,

you can read this example imperatively as follows:

- Apply :hasCase to the runtime value of $userName to get a string c representing a grammatical case.

- Compare c to each of the keys in the three variants ("vocative", "accusative", and the wildcard "*", which matches any string).

- Take the matching variant v and format its pattern.
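The three steps above can be sketched in C as follows. The struct variant type and the select_pattern function are hypothetical names invented for this illustration; they are not the ICU data model. The MF2 specification defines its own, more general selection procedure, whereas this sketch simply takes the first matching variant, which gives the expected result when the wildcard variant is listed last.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical types for illustration; not the ICU data model. */
struct variant {
    const char *key;      /* "*" is the wildcard key */
    const char *pattern;  /* unformatted pattern text */
};

/* Returns the pattern of the first variant whose key equals
   selected or is the wildcard "*"; NULL if nothing matches. */
const char *select_pattern(const struct variant *variants, size_t count,
                           const char *selected) {
    for (size_t i = 0; i < count; i++) {
        if (strcmp(variants[i].key, "*") == 0 ||
            strcmp(variants[i].key, selected) == 0) {
            return variants[i].pattern;
        }
    }
    return NULL;
}
```

Here the string passed as selected plays the role of c, the result of applying the selector function; formatting the chosen pattern is a separate step not shown.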

This example assumes that the runtime value of the argument $userName

is a structure whose grammatical case can be determined.

This example also assumes that custom :hasCase and :person functions

have been defined (the details of how those functions are defined

are outside the scope of this post).

In this example, :person is a formatting function,

like :datetime in the simpler example. :hasCase is a different

kind of function: a selector function, which may extract

a field from its argument or do arbitrarily complicated computation

to determine a value to be matched against.

In general: a matcher includes one or more selectors and one or more

variants. A variant includes a list of keys, whose length

must equal the number of selectors in the matcher; and a single

pattern.
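The requirement that each variant carry exactly one key per selector can be pictured with a fixed-size key array. This is a sketch under stated assumptions: NUM_SELECTORS, struct multi_variant, and variant_matches are hypothetical names, and the matcher is assumed to have two selectors.

```c
#include <stddef.h>
#include <string.h>

#define NUM_SELECTORS 2  /* assumption: a matcher with two selectors */

/* Hypothetical type: one key per selector, plus a single pattern. */
struct multi_variant {
    const char *keys[NUM_SELECTORS];
    const char *pattern;
};

/* A variant matches when every key equals the corresponding
   selector result or is the wildcard "*". */
int variant_matches(const struct multi_variant *v,
                    const char *const results[NUM_SELECTORS]) {
    for (size_t i = 0; i < NUM_SELECTORS; i++) {
        if (strcmp(v->keys[i], "*") != 0 &&
            strcmp(v->keys[i], results[i]) != 0) {
            return 0;
        }
    }
    return 1;
}
```

Because the array length is fixed by the number of selectors, a variant with too few or too many keys cannot even be represented, which mirrors the well-formedness rule described above.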

In this example, the selector of the matcher is

the placeholder {$userName :hasCase}. Selectors appear between

the .match keyword and the beginning of the first variant.

There are three variants, each of which has a single key.

The strings delimited by double sets of curly braces are patterns,

which in turn contain other placeholders. The selectors are used

to select a pattern based on whether the runtime value of

the selector matches one of the keys.

Supposing that :hasCase maps the value of $userName

onto "vocative", the formatted pattern consists of the

string "Hello, " concatenated with the result of formatting

this placeholder:

{$userName :person case=vocative}

concatenated with the string "!". (This also supposes that we

are formatting to a single string result; future versions of MF2

may also support formatting to a list of "parts", in which case

the result strings would be returned in a more complicated

data structure, not concatenated.)

You can read this placeholder like a function call with arguments:

"Call the :person function on the value of $userName with an

additional named argument, the name 'case' bound to the string

'vocative'." We also elide the details of :person, but you

can suppose it uses additional fields in $userName to format

it as a personal name.

Such fields might include a title, first name, and last name

(incidentally, see

"Falsehoods Programmers Believe About Names"

for why formatting personal names is more complicated than

you might think).

Note that MF2 provides no constructs that mutate variables.

Once a variable is bound, its value doesn't change.

Moreover, built-in functions don't have side effects,

so as long as custom functions are written in a reasonable way

(that is, without side effects that cross the boundary

between MF2 and function execution), MF2 has no side effects.

That means that $userName represents the

same value when it appears in the selector and when it appears

in any of the patterns. Conceptually, :hasCase returns a result

that is used for selection; it doesn't change what the name

$userName is bound to.

Abstraction over function implementations

A developer could plug in an implementation of :hasCase that requires

its argument to be an object or record that contains grammatical

metadata, and then simply returns one of the fields of this object.

Or we could plug in an implementation that can accept a string

and uses a dictionary for the target language to guess its case.

The message is structured the same way regardless, though

to make it work as expected, the structure of the arguments

must match the expectations of whatever functions consume them.

Effectively, the message is parameterized over the meanings

of its arguments and over the meanings of any custom functions

it uses. This parameterization is a key feature of MF2.
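One way to picture this parameterization is with function pointers in C. Everything here is hypothetical: the struct person type, both selector implementations, and run_selector are made up for illustration and do not reflect how ICU registers custom functions. The suffix check in the second implementation is a placeholder with no linguistic validity, standing in for a real dictionary lookup.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical argument type carrying grammatical metadata. */
struct person {
    const char *name;
    const char *grammatical_case;  /* e.g. "vocative"; may be NULL */
};

/* A selector maps an opaque argument to a string to match on. */
typedef const char *(*selector_fn)(const void *argument);

/* Implementation 1: expects a struct person and reads a field. */
const char *has_case_from_metadata(const void *argument) {
    const struct person *p = argument;
    return p->grammatical_case != NULL ? p->grammatical_case : "unknown";
}

/* Implementation 2: expects a plain C string and guesses using a
   made-up suffix rule in place of a real dictionary. */
const char *has_case_from_string(const void *argument) {
    const char *s = argument;
    size_t len = strlen(s);
    return (len > 0 && s[len - 1] == 'o') ? "vocative" : "unknown";
}

/* The message only knows it has some selector; the caller decides
   which implementation to plug in. */
const char *run_selector(selector_fn has_case, const void *argument) {
    return has_case(argument);
}
```

Either implementation can be passed to run_selector, but each one only works if the argument has the shape it expects, which is the caveat about argument structure noted above.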

Summing up

This example couldn't be expressed in MF1, since MF1 has no custom functions.

The checking for different grammatical cases would be done in

the underlying programming language, with ad hoc code for selecting

between the different strings. This would be error-prone,

and would force a code change whenever a message changes

(just as in the simple example shown previously).

MF1 does have an equivalent of .match that supports a few specific

kinds of matching, like plural matching. In MF2, the ability to write

custom selector functions allows for much richer matching.

Further reading (or watching)

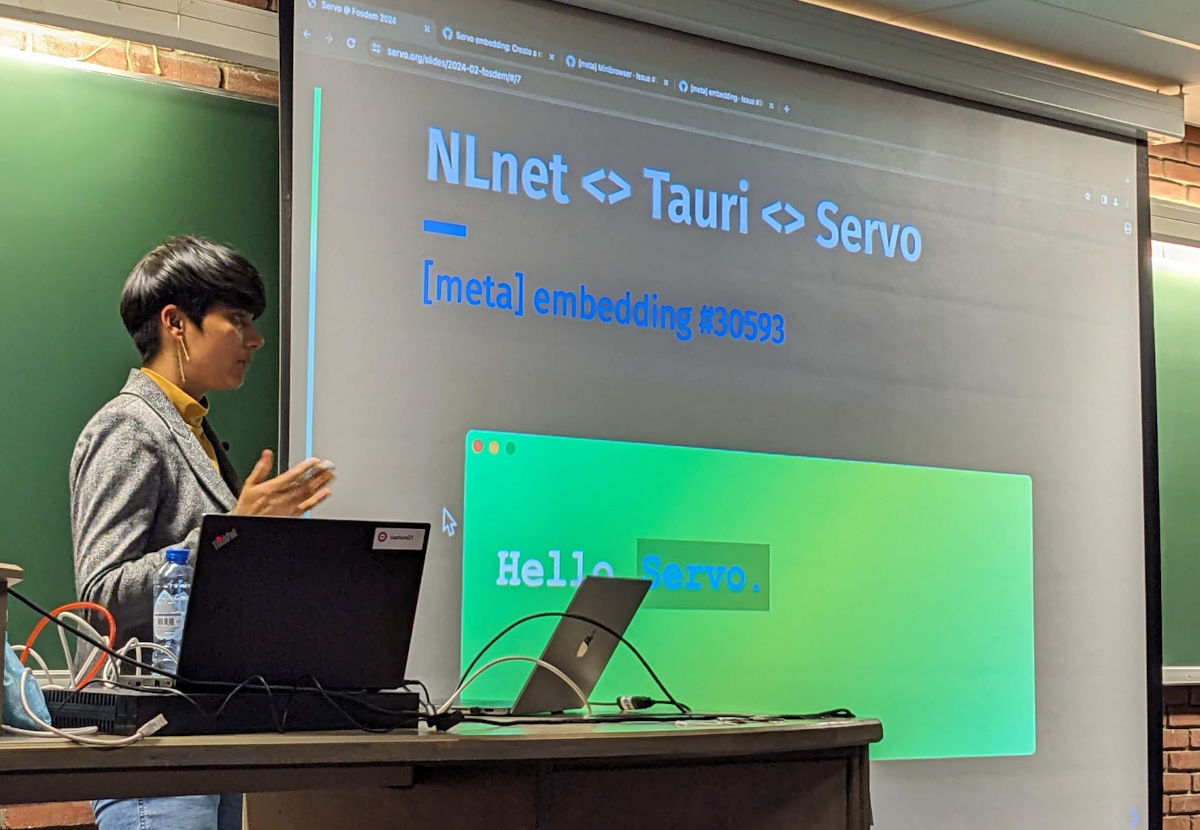

For more background both on message formatting in general

and on MF2, I recommend my teammate Ujjwal Sharma's talk at FOSDEM 2024,

on which portions of this post are based.

A recent MessageFormat 2 open house talk

by Addison Phillips and Elango Cheran also provides some great context and motivation

for why a new standard is needed.

You can also read a detailed argument in favor of a new standard

by visiting the spec repository on GitHub: "Why MessageFormat needs a successor".

Igalia's work on MF2, or: Who are we and what are we doing here?

So far, I've provided a very brief overview of the syntax and semantics of MF2.

More examples will be provided via links in the next post.

A question I haven't answered is why this post is on the Igalia

compilers team blog.

I'm a member of the Igalia compilers team; together with Ujjwal Sharma, I have been collaborating with the MF2 working group, a subgroup of the Unicode CLDR technical committee.

The working group is chaired by Addison Phillips

and has many other members and contributors.

Part of our work on the compilers team has been

to implement the MF2 specification as a C++ API in ICU.

(Mihai Niță at Google, also a member of the working group,

implemented the specification as a Java API in ICU.)

So why would the compilers team work on internationalization?

Part of our work as a team is to introduce and refine proposals

for new JavaScript (JS) language features

and work with TC39, the JS standards committee, to advance these proposals

with the goal of inclusion in the official JS specification.

One such proposal that the compilers team has been involved with is

the Intl.MessageFormat proposal.

The implementation of MessageFormat 2 in ICU provides support for browser engines

(the major ones are implemented in C++) to implement this proposal.

Prototype implementations are part of the TC39 proposal process.

But there's another reason: as I hinted at the beginning,

the more I learned about MF2, the more I began to see it as

a programming language, at least a domain-specific language.

In the next post on this blog, I'll talk about the implementation work

that Igalia has done on MF2 and reflect on that work from

a programming language design perspective. Stay tuned!